Last week I spent almost 2 days fixing a complex Nintex/SharePoint 2013 issue for one of our customers. The customer was not very big in terms of headcount, but they were using Nintex Workflow to automate all sorts of processes (one of the biggest Nintex deployments I have worked with).

Workflow timer jobs were getting stuck or failing, workflows were exiting with errors for no obvious reason, and more. We resolved the issue with a couple of fixes, and the workflows now run when they should and end as they were designed to.

Some of the issues and improvement points I flagged were: an improperly scaled Nintex deployment, an incorrect service topology, outdated product versions and poor workflow design.

Now, the fourth point (poor workflow design) was partly a consequence of the inadequate scale of the deployment. They were using a lot of Pause and Commit pending changes actions. Many of the workflows were designed with a two-minute pause after the first couple of actions.

As you may know, the Pause action actually pauses the workflow instance for the defined period of time, but the workflow does not resume as soon as that time has elapsed; it is resumed when the "Workflow" timer job runs. The default schedule of this job is every 5 minutes. This means that you cannot pause a workflow for less than 5 minutes, nor for exactly the time you have set. You can change the schedule of the Workflow timer job to work around the first limitation, but this can put additional load on your system.
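To make the effect of the timer job schedule concrete, here is a minimal sketch of when a paused instance would be picked up. It rests on a simplified model that is my assumption, not documented SharePoint behavior: the job runs on exact multiples of the interval counted from midnight, every run resumes all instances whose pause has expired, and job run time is ignored. The function name `pause_resume_time` is hypothetical.

```python
from datetime import datetime, timedelta


def pause_resume_time(started: datetime, pause: timedelta,
                      job_interval: timedelta = timedelta(minutes=5)) -> datetime:
    """Estimate when a paused instance is resumed by the "Workflow" timer job.

    Simplified model (an assumption, not documented SharePoint behavior):
    the job runs on exact multiples of job_interval counted from midnight,
    and each run resumes every instance whose pause has already expired.
    Job run time and queueing delays are ignored.
    """
    due = started + pause  # the moment the configured pause expires
    midnight = due.replace(hour=0, minute=0, second=0, microsecond=0)
    elapsed = due - midnight
    # Ceiling division: index of the first job run at or after the due time.
    runs = -((-elapsed) // job_interval)
    return midnight + runs * job_interval


if __name__ == "__main__":
    start = datetime(2024, 1, 1, 10, 4)  # hypothetical start time
    resume = pause_resume_time(start, timedelta(minutes=2))
    print(resume.time(), "after", resume - start)
```

Under this model, a two-minute pause that starts at 10:04 expires at 10:06 but is only resumed by the 10:10 job run, so the instance idles for 6 minutes. This is why the configured value is rarely the time the workflow actually waits.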

This is why I demonstrated an alternative to the Pause action that does not pause the workflow instance but simply waits a certain amount of time before continuing execution. I have not seen this approach in other sources, which is why I decided to share and explain it in this post.

There is another way to pause a workflow for less than 5 minutes. It is described in this article.

That alternative requires the "NTX PowerShell Action". This is great, but the action is open source, it is deployed as a farm solution, and although it was developed and published by a Nintex employee, this add-on is not backed or supported by Nintex. The PowerShell action is fantastic, but in my opinion it is not worth deploying it just to use it as a Pause alternative. Also, you cannot use it in Office 365 (SharePoint Online).

The PowerShell example works by executing PowerShell code that simply waits for a certain amount of time, after which the workflow continues. Obviously, to pause a workflow we need some sort of waiting. There is no out-of-the-box action that just waits: as we know, the Pause action does not do anything itself; it idles the instance at a certain point and waits for the timer job to resume it after the time has elapsed. In the PowerShell example we use PowerShell (the .NET Framework) to wait for a certain time without doing anything. The same thing can be achieved with a T-SQL statement, and in Nintex Workflow, both on-premises and in Office 365, we have the "Execute SQL" action.

If we want to put a two-minute wait in our SQL query, we can use the statement below:

```sql
WAITFOR DELAY '00:02';
```
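A detail that is easy to get wrong is the format of the delay literal. SQL Server reads `'00:02'` as hh:mm, so it waits two minutes, not two seconds, while `'02:00'` would wait two hours. The small helper below is hypothetical (it is not part of Nintex or SQL Server) and only handles the `hh:mm` and `hh:mm:ss` forms, but it makes that interpretation explicit.

```python
import re
from datetime import timedelta

# Accepts 'hh:mm' or 'hh:mm:ss'; other forms SQL Server allows are not handled.
_DELAY_PATTERN = re.compile(r"(\d{1,2}):(\d{2})(?::(\d{2}))?")


def parse_waitfor_delay(literal: str) -> timedelta:
    """Return how long WAITFOR DELAY '<literal>' waits, e.g. '00:02' -> 2 minutes."""
    match = _DELAY_PATTERN.fullmatch(literal.strip())
    if match is None:
        raise ValueError(f"unsupported WAITFOR DELAY literal: {literal!r}")
    hours, minutes = int(match.group(1)), int(match.group(2))
    seconds = int(match.group(3) or 0)
    if hours > 23 or minutes > 59 or seconds > 59:
        raise ValueError(f"time component out of range: {literal!r}")
    return timedelta(hours=hours, minutes=minutes, seconds=seconds)


if __name__ == "__main__":
    for text in ("00:02", "00:00:30", "02:00"):
        print(text, "->", parse_waitfor_delay(text))
```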

I am not a SQL guy, and I was surprised to find out that this statement works outside of the SQL Server management tools.

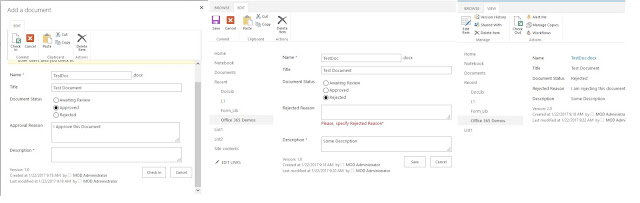

Below is the design of my demo workflow in SharePoint 2013. This should also work with SharePoint/Nintex 2010.

As you can see, it is pretty simple, just a proof of concept. Below is the configuration of the "Execute SQL" action.

My connection string uses "SQL" as the server, which is an alias for the SQL Server instance that hosts my SharePoint farm. I am using SSPI security with a Windows credential, which is actually my farm account saved as a global constant.

Below are the details from the execution. You can see that the Execute SQL actions took exactly 2 minutes to complete.

Unfortunately, on-premises you cannot use this approach for pauses longer than 5 minutes unless you use a loop that runs several delays, each shorter than 5 minutes. If you set a delay longer than 5 minutes, the workflow fails with the error "Error performing database operation. Timeout expired. The timeout period elapsed prior to completion of the operation or the server is not responding." This happens even if you set the connection timeout in the connection string to more than the 5-minute delay. (A likely reason is that an ADO.NET connection timeout only limits how long opening the connection may take, not how long a command may run; the command timeout is a separate setting.) I will do some more tests and research and might report this as a bug.
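The loop workaround boils down to splitting the total wait into delays that each stay under the limit. Below is a minimal sketch of that arithmetic, assuming a workflow loop that runs one Execute SQL action per iteration. The function name `plan_delay_chunks` is hypothetical, and so is the 240-second default, which is my own safety margin under the 5-minute limit rather than a documented value. How the resulting values get into the workflow (for example, a fixed iteration count or a collection variable) is left to your workflow design.

```python
from typing import List


def plan_delay_chunks(total_seconds: int, max_chunk_seconds: int = 240) -> List[str]:
    """Split a long wait into 'hh:mm:ss' literals for WAITFOR DELAY.

    Each literal is meant for one pass of a workflow loop whose Execute SQL
    action runs WAITFOR DELAY '<literal>', so no single statement runs long
    enough to hit the timeout. The 240-second default is an assumed safety
    margin under the 5-minute limit, not a documented value.
    """
    if total_seconds <= 0 or max_chunk_seconds <= 0:
        raise ValueError("durations must be positive")
    chunks = []
    remaining = total_seconds
    while remaining > 0:
        step = min(remaining, max_chunk_seconds)
        hours, rest = divmod(step, 3600)
        minutes, seconds = divmod(rest, 60)
        chunks.append(f"{hours:02d}:{minutes:02d}:{seconds:02d}")
        remaining -= step
    return chunks


if __name__ == "__main__":
    # A hypothetical 11-minute wait becomes three loop iterations.
    print(plan_delay_chunks(11 * 60))
```

With the default, an 11-minute wait becomes `['00:04:00', '00:04:00', '00:03:00']`, so no single Execute SQL call comes close to the 5-minute timeout. A 4-minute chunk also stays within the Office 365 behavior described below, where only delays longer than 4 minutes caused errors.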

According to the MSDN documentation for the WAITFOR statement, it should also work against Azure SQL Database. Nintex Workflow for Office 365 also has the Execute SQL action. I tested this and noticed two things. First, the connection timeout you specify in the connection string is set to 365 if the number is larger than that. Second, if you set a delay longer than 4 minutes, you get unexpected HTTP errors during execution; Workflow Manager retries a couple of times and then the workflow fails. I think both are issues with Workflow Manager in SharePoint Online.

This matters less there, because the Pause action in SharePoint 2013 workflows (Workflow Manager) does not depend on SharePoint timer jobs, so you will not get the same pause issues as with the on-premises 2010 workflow framework. Still, it is an option. See the example configuration of the action below.

To sum up, this approach might be extremely useful if you need short pauses (no more than 5 minutes) in your on-premises workflows. I hope you find it useful!